The Arc of Engineering

[Copyright 2008 by Bruce F. Webster. All rights reserved. Adapted from Surviving Complexity (forthcoming).]

And so, from hour to hour, we ripe and ripe,

And then, from hour to hour, we rot and rot;

And thereby hangs a tale.

William Shakespeare, As You Like It, Act II, Scene vii

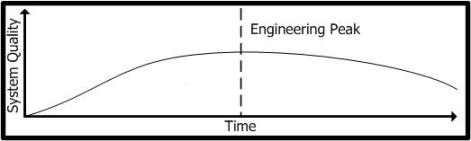

I have observed a pattern (or anti-pattern) in IT engineering that looks something like this:

Let’s call this the arc of engineering, since that’s more compact and elegant than the strangely shaped curve with what appears to be a single inflection point of engineering. “Arc” in any case conveys the essential sense: that system quality (however you wish to define that) rises over time to a peak value and then starts to decline.

This came to mind because I recently put a new hard drive in my laptop and have been going through the tedious drill of reinstalling all the various applications and utilities that I am used to using. With some software, I want — or have to use — the latest versions. So, for example, my versions of Firefox, Thunderbird, Quicken, and so on are all current, not to mention my anti-virus/firewall software.

For others, however, I either don’t need — or specifically don’t want — the newest versions. The laptop’s restore disks installed Windows XP, for which I’m grateful; I have no plans whatsoever to upgrade to Vista. Similarly, I installed MS Office 2003; I don’t own and don’t plan to buy Office 2007. As far as I can tell, Microsoft pretty much hit the top of its engineering arc for both Windows and Office a few years ago. In fact, the early to mid-2000s may well represent the peak of quality, features, and user acceptability for these product lines. Given the on-going end-user unhappiness with Vista and Office 2007 — and the corporate resistance to upgrades to either — Microsoft’s user base may never again be as happy with these product lines as they were a few years ago.

This is not an anti-Microsoft screed, because I have observed a similar effect in other companies and product lines. For example, I was a very early Macintosh adopter and champion, though I also was quite critical of its initial limitations. Apple continued to make improvements and changes to the Macintosh product line and finally reached what I considered at the time to be a close-to-perfect Mac: the Macintosh IIcx. It was solid, reliable, and very stable. Apple followed up with the Macintosh IIci, which used the same form factor, but offered several improvements over the IIcx, though there were some software stability problems due to the rewritten ROM and related hardware changes.

And then came the Macintosh IIfx, which was supposed to be even better — but the IIfx soon became well known for its hardware problems, in particular its tendency to blow out power supplies. Other Mac II models — the IIvi, IIsi, and IIvx — each had their own problems and limitations. From there, Apple descended into the product-line chaos known as Quadra/Centris/Performa, a marketing disaster from which Apple would not recover completely until Steve Jobs came back to Apple and introduced the iMac.

In this same time period, Apple had to deal with the fact that its operating system (System 7, introduced in 1991) was under-featured, underwhelming, and built on aging technology. System 7 would lose its graphical UI edge with the introduction in 1992 of MS Windows 3.1 and would pretty much lose the operating system wars completely a few years later with the introduction of MS Windows 95. It would not be until Apple’s introduction in 2002 of the Jaguar (10.2) version of Mac OS X, a heavily updated and retooled version of the NeXTstep OS, that Apple would regain technical and UI leadership in operating systems.

This same pattern can appear in internal IT projects. The system under development goes into production and continues to improve over time. Then at some point, the system’s overall quality (functionality, performance, reliability) starts to decline and maintenance costs begin to go up. At some point the organization decides to replace or re-engineer the system, sometimes successfully, sometimes not.
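The shape of that rise and decline can be sketched with a toy model. To be clear, the formula below is purely an illustrative assumption of mine, not a measurement of any real system: quality is modeled as improvements that saturate over time (hypothetical parameters `gain` and `tau`) minus a steady loss to rot (hypothetical parameter `rot`). Under that assumption the peak falls where the two rates balance.

```python
import math


def quality(t, gain=10.0, tau=3.0, rot=0.5):
    """Toy quality score at time t (arbitrary units, all parameters hypothetical).

    Improvements saturate toward `gain` with time constant `tau`,
    while rot subtracts a constant `rot` per unit of time.
    """
    return gain * (1.0 - math.exp(-t / tau)) - rot * t


def peak_time(gain=10.0, tau=3.0, rot=0.5):
    """Time at which the toy model peaks.

    Setting the derivative (gain / tau) * exp(-t / tau) - rot to zero
    gives t = tau * ln(gain / (rot * tau)).
    """
    if gain <= rot * tau:
        # Rot outpaces improvement from the start, so quality never rises.
        raise ValueError("no arc: quality declines from time zero")
    return tau * math.log(gain / (rot * tau))


if __name__ == "__main__":
    t_peak = peak_time()
    for t in (0, 2, 4, t_peak, 8, 12, 16):
        print(f"t={t:5.2f}  quality={quality(t):5.2f}")
```

Nothing here says real systems follow this particular curve; the point is only that steadily diminishing returns on improvement, combined with steadily accumulating rot, are enough to produce an arc.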

So, why does this happen? I believe several factors are or can be involved. (To simplify the discussion, I’ll use “system” to mean the software, IT system, product, or product line under discussion; likewise, I’ll use “developer” to mean the company and/or IT development group responsible for creating, enhancing, and maintaining the system.)

The developer loses conceptual control of the system. In other words, no person or group of people understands the overall architecture, design, and operating constraints of the system. Thus the developer can no longer ensure that on-going changes to the system are consistent and compatible with existing aspects of the system or with other changes being made. This in turn can come about for several reasons:

- The system grows too large and complex. Sheer code size is not necessarily a problem (though it doesn’t help); on the other hand, the degree of fragmentation and interdependencies can be. (See this Vista post-mortem for some examples.)

- Those who really understand the system have left or moved on to other projects. The best and brightest IT engineers — particularly architects and designers — usually prefer to work on new systems. That means once this system has shipped (or gone into production), the people who created the system in the first place and who understand it best may well want to move on to other things, inside or outside of the company. Those who replace them — and those who stay behind — may be quite talented but may not understand all the system’s nuances and requirements. Eventually, they may decide to move on as well. With each generation of turnover, more institutional memory is lost.

- The development organization no longer meshes with the system. Conway’s law states that “any piece of software reflects the organizational structure that produced it.” Since the IT group responsible for the system often changes once the system ships or goes into production, the organization of the new group responsible for the system may not match the system’s structure, causing gaps in knowledge and responsibility, plus a tendency, conscious or not, to reshape the system to match the new organization.

Software rot sets in. Software rot is a real term of art within software engineering, referring to the well-known tendency of systems in production to become less reliable over time for a variety of reasons having mostly to do with piecemeal and uncoordinated changes to the system, or growing incompatibilities with external systems.

The enhanced system finally outgrows its original foundation. When the developer seeks to expand or improve the system, it often does so without properly rearchitecting and redesigning the system first. As a result, the original system eventually may be stressed beyond its original intent and begins to suffer from functionality, reliability and/or performance problems. Mike Parker, one of the co-founders of Pages Software Inc. (read the 3rd paragraph), once described this syndrome as “trying to build a castle on the foundation of an outhouse.”

Market or business needs shift beyond the product’s fundamental design. A developer creates and enhances a system to meet a specific set of business/market requirements. However, there often comes a time when new or expanded requirements exceed the capabilities of the system’s original architecture and design. This can lead to the “outgrowing the foundation” situation described above, with the resulting problems. Or it can lead to a true re-engineering or replacement effort, which may well lead to the final two problems below.

The developer begins to add “blue sky”/“kitchen sink” enhancements. Once the “door is open” on changes to the system (or on features for the new/re-engineered system), the requests pour in from all directions. Without rigorous control over these enhancements, the development effort can quickly bloat in every aspect: architecture, design, code size, schedule, staff, and defects.

Backward compatibility is maintained at all costs. Supporting backward compatibility in a system can be a bit like trying to drive a car while pedaling a wheel at the same time. Apple abandoned its old arc of engineering and created a new one under Mac OS X by first forcing binary backward compatibility into a separate mode (the “Classic” environment) and then dropping the Classic environment altogether for Intel-based Macs running Mac OS X. Microsoft, which has struggled with the backward compatibility issue for almost its entire OS life, has announced that for “Windows 7” it will in effect follow Apple’s lead: it will not support binary (and to a certain extent source) backward compatibility directly, but will instead use virtualization technology (switching to a backward-compatible OS) for older applications.

I’m sure there are other reasons I haven’t listed here, but these do show why the arc of engineering exists. Comments? ..bruce..

This arc definitely exists – some authors refer to it as software deterioration, a synonym for rot, though different in meaning from mechanical erosion. I would rather think of it as a natural property of information, much like entropy in the universe. How can you fight a thing like this?

Alan Kay wrote a proposal about reinventing programming – http://irbseminars.intel-research.net/AlanKayNSF.pdf

The idea is to use a small number of very powerful concepts with which a programmer can express solutions and knowledge domains briefly, in a self-explanatory and self-learning way. This would reduce the size of the code base, and also the complexity and difficulties introduced by changes in system requirements, such as the emergence of contradictory elements in the knowledge domain.

While I wish with all my heart for such a reinvention of programming to come true, I really doubt that even this can reverse software deterioration.

Anyway, you gave really nice samples of concrete engineering arcs!