The Wetware Crisis: the Thermocline of Truth

[Updated 09/12/13 — fixed some links and added a few.]

[Copyright 2008 by Bruce F. Webster. All rights reserved. Adapted from Surviving Complexity (forthcoming).]

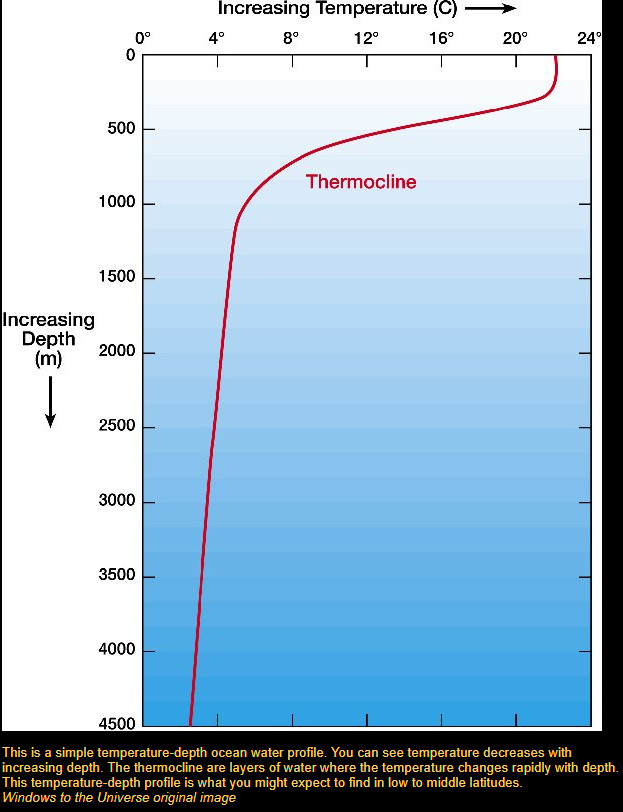

A thermocline is a distinct temperature barrier between a surface layer of warmer water and the colder, deeper water underneath. It can exist in both lakes and oceans. A thermocline can prevent dissolved oxygen from getting to the lower layer and vital nutrients from getting to the upper layer.

In many large or even medium-sized IT projects, there exists a thermocline of truth, a line drawn across the organizational chart that represents a barrier to accurate information regarding the project’s progress. Those below this level tend to know how well the project is actually going; those above it tend to have a more optimistic (if unrealistic) view.

Several major (and mutually reinforcing) factors tend to create this thermocline. First, the IT software development profession largely lacks (or fails to put into place) automated, objective and repeatable metrics that can measure progress and predict project completion with any reasonable degree of accuracy. Instead, we tend to rely on seat-of-the-pants (or, less politely, out-of-one’s-butt) estimations by IT engineers or managers that a given subsystem or application is “80% done”. This, in turn, leads to the old saw that the first 90% of a software project takes 90% of the time, and the last 10% of a software project takes the other 90% of the time. I’ll discuss the metrics issue at greater length in another chapter; suffice it to say that the actual state of completion of a major system is often truly unknown until an effort is made to put it into a production environment.

Second, IT engineers by nature tend to be optimists, as reflected in the common acronym SMOP: “simple matter of programming.” Even when an IT engineer doesn’t have a given subsystem completed, he tends to carry with him the notion that he can whip everything into shape with a few extra late nights and weekends of effort, even though he may actually face weeks (or more) of work. (NOTE: my use of male pronouns is deliberate; it is almost always male IT engineers who have this unreasonable optimism. Female IT engineers in my experience are generally far more conservative and realistic, almost to a fault, which is why I prefer them. I just wish they weren’t so hard to find.)

Third, managers (including IT managers) like to look good and usually don’t like to give bad news, because their continued promotion depends upon things going well under their management. So even when they have problems to report, they tend to understate the problem, figuring they can somehow shuffle the work among their direct reports so as to get things back on track.

Fourth, upper management tends to reward good news and punish bad news, regardless of the actual truth content. Honesty in reporting problems or lack of progress is seldom rewarded; usually it is discouraged, subtly or at times quite bluntly. Often, said managers believe that true executive behavior comprises brow-beating and threatening lower managers in order to “motivate” them to solve whatever problems they might have.

As the project delivery deadline draws near, the thermocline of truth starts moving up the levels of management because it is becoming harder and harder to deny or hide just where the project stands. Even with that, the thermocline may not reach the top level of management until weeks or even just days before the project is scheduled to ship or go into production. This leads to the classic pattern of having a major schedule slip — or even outright project failure — happen just before the ship/production date.

Sometimes, even then management may not be willing to hear or acknowledge where things really are but instead insist on a “quick fix” to get things done. Or management will order the project to be shipped or put into production, at which point all parties discover (a) that the actual business drivers and requirements never successfully made it down through the thermocline to those building the system, (b) that there are serious (and perhaps fatal) quality issues with the delivered systems, and thus (c) that the delivered project doesn’t do what top management really requires.

[INSERTED – 04/30/08]

Since Jerry Weinberg (see comments) and others have disputed that the thermocline is “distinct”, let me insert two real-world examples that I have personal knowledge of from over a decade ago. Both examples involve Fortune 100 corporations that were undergoing Y2K remediation across the entire enterprise. In the first case, the corporate Y2K coordinator had a weekly meeting with the heads of ~20 divisions and departments within the corporation in which those senior executives would report on their division/department’s Y2K remediation status with a green/yellow/red code. Four weeks before Y2K remediation was scheduled to be completed, virtually all the divisions/departments were reporting green, with a few yellows. Just one week later, three weeks before remediation was to be completed, almost all the department/division heads suddenly reported their status to be yellow or red. The Y2K coordinator (who told me about the meeting right afterwards) looked around the room and asked, “So, what do you know today that you didn’t know a week ago?” No one had much of an answer.

A year later, I was asked by a major corporation to come in and review their Y2K remediation because almost exactly the same thing had happened: almost all the departments/divisions had been reporting each week that they were on schedule to complete their Y2K remediation until roughly two weeks before the remediation was supposed to be completed, and then suddenly about 70% of the departments/divisions said they weren’t going to be done on time. The mass shift from “on schedule” to “not on schedule” took place in exactly one week and happened just a few weeks before the deadline. I came in, interviewed some 40 people (under strict confidentiality, in spite of pressure from top management to reveal who said what), and wrote up an honest assessment of where things stood, with a plan for getting things done. The corporation then asked me to come in and implement that plan, so I ended up commuting over 2000 miles/week (back and forth) for 2-3 months to do just that.

I have seen the same pattern repeatedly in IT systems failure lawsuits I have worked on, particularly when I’ve had large numbers of internal e-mails and memos to review. At times, I can identify right where the thermocline is and how it creeps up the management chain as the deadline draws near. In such cases, it usually doesn’t reach the top of the management chain (which, in the case of these lawsuits, means the developer notifying the customer) until shortly (<1 month) before the reported deadline. In fact, this syndrome goes hand-in-hand with the IT system failure lawsuit pattern I call “The Never-Ending Story”.

[06/16/08]: In fact, here’s a real-world IT project review memo, written several years ago, that described a “thermocline of truth” with a very distinct and discrete boundary.

In short, Jerry’s arguments notwithstanding, I’ve seen the thermocline of truth, I’ve seen it be very distinct, and I’ve seen it work its way up the management chain — just as I’ve described. I’m not writing this to be clever or glib; I’m writing it because it really happens.

[END INSERTION]

Successful large-scale IT projects require active efforts to pierce the thermocline, to break it up, and to keep it from reforming. That, in turn, requires the honesty and courage at the lower levels of the project not just to tell the truth as to where things really stand, but to get up on the table and wave your arms until someone pays attention. It also requires the upper reaches of management to reward honesty, particularly when it involves bad news. That may sound obvious, but trust me — in many, many organizations that have IT departments, honesty is neither desired nor rewarded.

I know that first hand. I can think of one project — being developed by one firm (the one that retained me) for another company (the customer) — where I was in on a consulting basis as a chief architect. In the final planning meeting before submitting the bid to the customer, the project manager set forth an incredibly aggressive and unachievable schedule to be given to the customer. I objected forcefully in the meeting — after all, we didn’t even have an architecture yet, much less a design, yet the project manager already had a fixed completion date — and later that afternoon, I wrote up a memo listing thirteen (13) major risks I saw to the project. While some of the engineers on the project cheered the memo, management told me in so many words to shut up and architect.

However, less than two months later, I wrote a new memo, based on the old one, and pointed out that 12 of the 13 risks I had identified had actually come to pass. Shortly after that, the project manager had to go back to the customer with a new delivery schedule that was twice as long as the original one. A month or two after that, my role as an architect came to an end. I had a final lunch with the two head honchos in upper management, and to their credit, they asked for my final assessment. I told them that many of the bumps and potholes were just part of the software development process, but that they should never have given that blatantly unrealistic schedule to the customer. As I told them, “When you do something like that, in the end you look either dishonest or incompetent or both. And there’s no upside to that.”

A few months after I left, I got word that the schedule had slipped to three times the original length, and not long after that, I got word that the customer had canceled the project altogether. As I said: just no upside.

[UPDATE: 09/12/13]: Here’s a $1 billion failed USAF project that appears to have largely foundered on the thermocline of truth.

[For a discussion of where the thermocline analogy originally came to me, see this post at And Still I Persist.]

About the Author: bfwebster

Comments (31)


Sites That Link to this Post

- Pitfall: Asking the wrong questions : Webster & Associates LLC | April 27, 2008

- Some thoughts on “Up or Out” : Bruce F. Webster | April 29, 2008

- Gender differences in coding styles? : Bruce F. Webster | June 9, 2008

- Anatomy of a runaway IT project : Bruce F. Webster | June 16, 2008

- The Termocline of Truth | Corpus Scriptorum Crumbum | June 17, 2008

- The Human ESB at vedovini.net | June 19, 2008

- The thermocline of truth — at NASA : Bruce F. Webster | August 26, 2008

- The thermocline of innovation (NASA, again) : Bruce F. Webster | January 30, 2009

- links for 2010-11-09 « AB's reflections | November 9, 2010

- The Thermocline of Knowledge : Bruce F. Webster | April 8, 2011

- Rupert Jones » Archive » Potemkinism | June 1, 2011

- RISE: The Psychology of Computer Programming (Gerald M. Weinberg, 1971/1998) : Webster & Associates LLC | May 21, 2012

- More IT failure news from England : Webster & Associates LLC | September 4, 2013

- $1 billion example of the Thermocline of Truth : Webster & Associates LLC | September 9, 2013

- Obamacare and the Thermocline of Truth : And Still I Persist… | September 26, 2013

- Obamacare: descent into the maelstrom : And Still I Persist… | October 9, 2013

- Thermocline of truth | Internet Scofflaw | October 25, 2013

- Thermocline of Truth | Senior DBA | February 27, 2015

- Teaching CS 428 (Software Engineering) at BYU : Bruce F. Webster | January 12, 2017

- “The Surgical Team” in XXI Century | Tech Programing | January 4, 2021

- Why Software Companies Die | Tech Programing | January 5, 2021

- The Mind of David Krider | July 19, 2021

It’s an honor to get critiqued by someone like Jerry Weinberg, one of my personal IT heroes — I own a dozen or so of his books, all of which I’ve read.

It’s even more fun when I think he’s wrong, or at least a bit sloppy, in his critique. To wit:

Actually, “distinct” was applied to the oceanographic definition, not necessarily the IT definition. But even so, it is my experience that when an IT project is in trouble in a large organization (the premises I set forth), there is a relatively distinct layer — usually no more than one layer of management — that forms the thermocline. Most of those below the layer are pretty convinced the project is in trouble; most of those above are convinced the project is doing well. As the project nears its deadline, that thermocline moves up the organization chart.

That said, I don’t disagree (and, in fact, my article agrees) that the fudging per se tends to take place at each level; my observation stands, however, that there often is a distinct layer where the troubled/not troubled flip occurs.

Two errors here on Jerry’s part. First, I never stated that metrics would fix the problem; I merely noted that the IT industry lacks “automated, objective and repeatable metrics” that can predict when an IT project will be completed, which is precisely why misinformation gets passed up the chain. Second, “automated, objective and repeatable” by definition pretty much precludes “fudging”, since it describes metrics that upper management can run and review independent of lower management.

Again, Jerry apparently did not read the paragraph that said “Successful large-scale IT projects require active efforts to pierce the thermocline, to break it up, and to keep it from reforming. That, in turn, requires the honesty and courage at the lower levels of the project not just to tell the truth as to where things really stand, but to get up on the table and wave your arms until someone pays attention. It also requires the upper reaches of management to reward honesty, particularly when it involves bad news. That may sound obvious, but trust me — in many, many organizations that have IT departments, honesty is neither desired nor rewarded.”

First, that describes — in more detail — what Jerry is referring to and notes that it requires effort not just from upper management but from those in the trenches as well.

Second, Jerry’s comment assumes that upper management wants to know the honest truth. I know from first-hand experience — as I suspect Jerry does — that often upper management doesn’t want to hear any bad news, they simply want the system to somehow go into production, magically or otherwise. (I personally dealt with this while reviewing a half-billion dollar project that was two years late and nowhere near completion.)

In other words, when a thermocline of truth forms, it is usually because upper management doesn’t want to hear the truth — they just want good news. As Jerry knows well (and has written about), few large organizations are set up to reward failure and honesty. ..bruce..

You wrote: “It’s an honor to get critiqued by someone like Jerry Weinberg, one of my personal IT heroes — I own a dozen or so of his books, all of which I’ve read. It’s even more fun when I think he’s wrong, or at least a bit sloppy, in his critique.”

I appreciate your feedback pointing out my sloppiness. I think blogs may tend to encourage sloppiness in me. I’ll try to do better as I clarify our areas of disagreement, which are small compared to the whole problem of lying about project progress, which we obviously agree on, even if we don’t agree precisely on the solutions.

You wrote:

“… I don’t disagree (and, in fact, my article agrees) that the fudging per se tends to take place at each level; my observation stands, however, that there often is a distinct layer where the troubled/not troubled flip occurs.”

Well, we work in different organizations, so it’s natural that we’d observe different patterns. I’m sure your observations are accurate for the organizations with which you work. In my experience, there may be places where the troubled/not troubled flip occurs, but it’s more an individual choice of managers at different levels in different departments. But there might be some correlation in levels brought about by roughly the same amount of fudging at each level. (There tends to be a limit to the amount of fudge any manager can do, and that limit tends to be constant throughout the organization.)

You wrote: “First, I never stated that metrics would fix the problem; I merely noted that the IT industry lacks “automated, objective and repeatable metrics” that can predict when an IT project will be completed, which is precisely why misinformation gets passed up the chain.”

Fair enough. I read too much into this: the implication that such “automated, objective and repeatable metrics” were somehow within reach, so that people should use them. You and I agree that they aren’t available (in spite of claims) to most organizations today. Certainly not the ones who fudge, fudge, fudge their project data.

Then you say, ‘Second, “automated, objective and repeatable” by definition pretty much precludes “fudging”, since it describes metrics that upper management can run and review independent of lower management.’

Well, that could be taken as a definition, but maybe you really believe this kind of measurement system is easy to find, or even possible.

[As an added note, I have a chapter in my forthcoming book entitled “Lies, Damned Lies, and Metrics”.]

[As an added note, I have an entire forthcoming book entitled “Perfect Software: and other fallacies about testing.” Apparently we both feel that this field is rife with lies and fallacies and myths.]

I wrote: “If there is a “solution” to this problem, it requires higher levels of management to drop down through the levels and do some validation personally. This is not easy, for many reasons, but the best managers do this. Much of my work as a consultant to upper managers is exactly this kind of validation, bypassing the fudging layers.”

You replied: “Again, Jerry apparently did not read the paragraph that said ‘Successful large-scale IT projects require active efforts to pierce the thermocline, to break it up, and to keep it from reforming. That, in turn, requires the honesty and courage at the lower levels of the project not just to tell the truth as to where things really stand, but to get up on the table and wave your arms until someone pays attention.’”

I disagreed with your “pierce the thermocline” metaphor, obviously, because I didn’t agree with your thermocline metaphor to begin with. Where I’ve worked, there’s no thermocline to pierce, but a lot of layers of management to bypass and go straight to the source of the data.

And, I don’t expect a “thermocline” organization to magically produce “honesty and courage” at the lower levels. It’s the job of the upper management to create an environment where a whole lot of courage isn’t required for people to be honest. And that may require a lot more work than people imagine. [See my article with Jean McLendon, “Beyond Blaming” (http://www.ayeconference.com/beyondblaming/), and my article “Destroying Communication and Control in Software Development” (http://www.stsc.hill.af.mil/crosstalk/2003/04/weinberg.html).]

You wrote: “It also requires the upper reaches of management to reward honesty, particularly when it involves bad news. That may sound obvious, but trust me — in many, many organizations that have IT departments, honesty is neither desired nor rewarded. … First, that describes — in more detail — what Jerry is referring to and notes that it requires effort not just from upper management but from those in the trenches as well.”

Here we totally agree. But generally, the effort will die if upper management is not proactive. That’s what they’re supposedly paid for.

You then write: “Second, Jerry’s comment assumes that upper management wants to know the honest truth. I know from first-hand experience — as I suspect Jerry does — that often upper management doesn’t want to hear any bad news, they simply want the system to somehow go into production, magically or otherwise.”

We may be in agreement here, but perhaps differ on the definition of “upper management.” If you’re talking about upper management in the IT department, then I agree. But when I deal with the entire organization’s top management, outside of IT, one of the most intense questions they ask is, “How can I get honest reporting out of IT projects?” To them, IT is just one component of a successful organization, and a component they usually can’t understand on their own. They know they cannot do their job with underlings who lie, but they don’t know how to change this dynamic, whether it be thermoclines or fudgings. And what I have to tell them is that the only way to do that is to bypass the cline or the fudge and “get to the bottom of things.” That’s why they’re paid those fabulous salaries.

Jerry:

I’m honored (honest!) that you’d take the time to come over here and expand on your critique; it’ll all help with the final product. I think we’re largely in violent agreement here, with the exception of whether thermoclines exist in organizations. I’ve seen them personally, usually when I’ve been called in to review a troubled project (though also when I’ve worked as an expert on IT systems failure lawsuits). In other words, I’ve been able to pinpoint the layer at which the ‘flip’ occurs.

And, no, I don’t think that “automated, objective, repeatable metrics” are easy, and I’m not entirely clear they exist.

I look forward to your new books, as always! ..bruce..

I’ve run across similar problems in managing large, complicated systems, and one explanation I came up with can be illustrated by a crude mathematical model.

Suppose we have a perfectly spherical elephant, whose mass may be neglected … er, no, suppose we have an organisation with four levels of management. The lowest level is the one dealing with the hardware, or the environment, and is therefore the level with the most accurate and complete knowledge of reality. Let’s say (for ease of calculation) that things are coasting along: there are equal amounts of good news and bad news at the coalface.

Now generally, people like to hear good news, and dislike to hear bad news; in extreme cases, a “shoot the messenger” policy can be in force. So it’s only human nature that reports of good news get a bit exaggerated, and reports of bad news get trimmed back a touch. Not by much; to make the maths easier, say good news gets increased by 10 percent on each reporting, and bad news is reduced by the same amount.

So after three levels of reporting, with equal amounts of good news and bad news coming into the organisation, the man at the top hears 1 x 1.1 x 1.1 x 1.1 units of good news, and 1 x 0.9 x 0.9 x 0.9 units of bad news; 1.33 to 0.73, or nearly twice as much good news as bad.

“Hey”, says the boss, “things are going really, really well.”
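The arithmetic in this model can be checked with a short script. What follows is a minimal sketch in Python, assuming only what the model states: news starts in equal amounts at the bottom, and every hop up the chain boosts good news by 10 percent and trims bad news by 10 percent. The function name `reported_news` and its `boost`/`trim` parameters are hypothetical names chosen for illustration.

```python
from __future__ import annotations


def reported_news(good: float, bad: float, levels: int,
                  boost: float = 0.10, trim: float = 0.10) -> tuple[float, float]:
    """Return (good, bad) news as heard after `levels` hops up the chain.

    Hypothetical helper for the crude model above: each hop multiplies
    good news by (1 + boost) and bad news by (1 - trim).
    """
    for _ in range(levels):
        good *= 1 + boost
        bad *= 1 - trim
    return good, bad


if __name__ == "__main__":
    # Four levels of management means three reporting hops to the top.
    good, bad = reported_news(1.0, 1.0, levels=3)
    print(f"good={good:.2f} bad={bad:.2f} ratio={good / bad:.2f}")
    # prints "good=1.33 bad=0.73 ratio=1.83"
```

Because every hop multiplies the good-to-bad ratio by the same factor (1.1 / 0.9, about 1.22), the distortion compounds smoothly with each added layer of management; that uniformity is why, as the commenter notes, this model alone does not produce a distinct thermocline.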

This, of course, assumes that information travels only through regular channels, and doesn’t explain the thermocline effect, but does explain most of the actual examples I’ve seen in managing large systems. My (partial) cure was to be very pleased if anyone told me any bad news; another approach would be to develop accurate reporting systems that bypass multiple levels, but that would greatly upset the managers thus bypassed. The real answer is to hire competent managers, but that’s not always an option.

C W Rose

Simply the best article I’ve ever read.

So much so that I had to blog about it:

http://stevefouracre.blogspot.co.uk/2012/09/this-best-article-about-it-i-simply.html

This principle is covered by this well-known story:

http://ogun.stanford.edu/~bnayfeh/plan.html

“It is a vessel of fertilizer, and none may abide its strength.”

“… but that they should never have given that blatantly unrealistic schedule to the customer. ”

They would not have gotten the contract without the blatantly unrealistic schedule.

“There are products that you shouldn’t develop, companies you shouldn’t challenge, customers you shouldn’t win, markets you shouldn’t enter, recommendations from the board of directors you shouldn’t follow.” — me (The Art of ‘Ware, M&T Books, 1995)

Thank you for sharing, Bruce. This article really struck home for a couple of reasons. I am constantly trying to direct my management in what it takes to successfully develop IT projects. In the end I am still failing to deliver metrics that management approves of and understands. My management has limited IT background, which has presented a greater challenge in my career. As part of my job I realize that this is something I need to improve upon, not management. Coincidentally, part of my group’s responsibility has involved us writing algorithms and software related to thermoclines in water bodies…